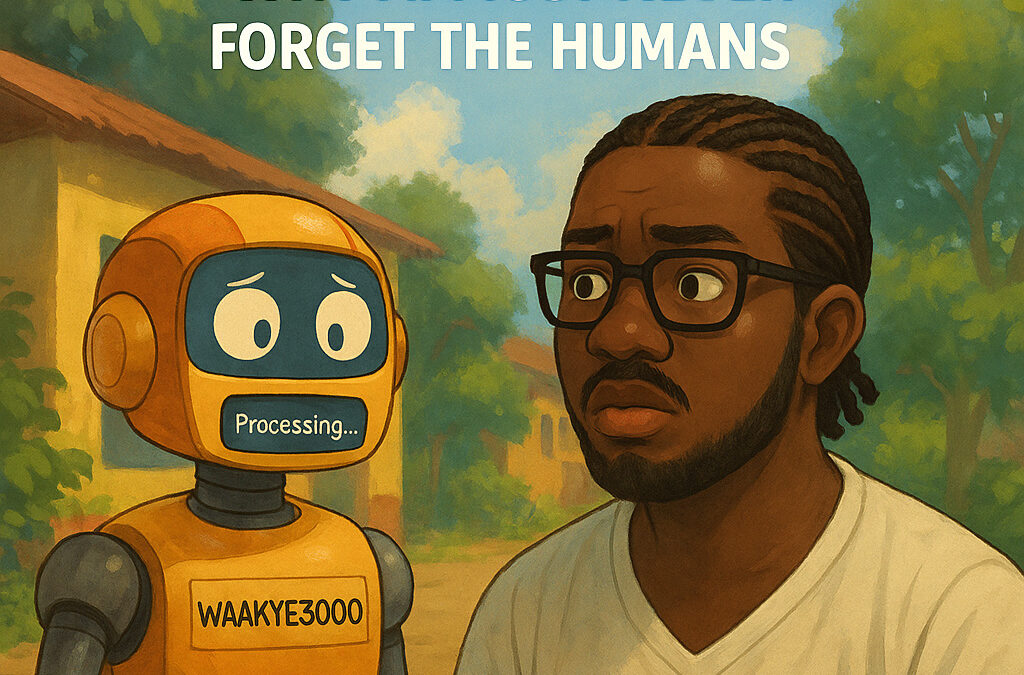

By Theophilus Atuahene Adu, a.k.a. IyamAtuahene

Slam Poetry Organizer & Advocate | PR & Marketing Strategist | Transforming Communities through Arts & Social Impact

Imagine this:

one fine Harmattan morning in Accra, your favourite waakye vendor has been replaced by a shiny AI robot named Waakye3000. No sass, no banter, just “Processing beans-to-rice ratio… Please wait.” You stand there, nostalgic, missing the human error of an extra egg or the humble apology for being late. This is what happens when we build AI without building in humanity.

Now let’s talk facts. Yes, Artificial Intelligence has changed the game, from automating banking systems to diagnosing diseases faster than you can say “Korle-Bu.” We love the productivity, the efficiency, and how it does more with less. But here’s where the kokonsa enters: what if AI starts doing less for us, and more to us?

Without safety nets (ethical codes, laws, human-centric design), AI could easily become the sakawa version of innovation: clever but dangerous. Self-trained, unsupervised, and drunk on data, some AI systems are already making decisions that influence human lives, from who gets a loan in Kasoa to who’s flagged by facial recognition at Kotoka.

Let’s bring in the OG of robot wisdom: Isaac Asimov. His Three Laws of Robotics read like a Bible for AI developers:

1. A robot may not harm a human or, through inaction, allow a human to come to harm.

2. A robot must obey human orders unless they clash with Law 1.

3. A robot must protect itself, as long as doing so doesn’t conflict with Law 1 or 2.

Sounds basic, right? But as with most laws in Ghana, it’s the implementation that’s the problem. Because if we’re not careful, one day your phone’s AI might ghost you because it thinks you’re “toxic.”

Now, before you laugh this off as sci-fi, let’s break it down with a little trotro logic. You wouldn’t buy a new V8 without brakes. So why are we building AI without moral brakes? Why create systems that outthink us but can’t outfeel us? Imagine a world where your smart fridge locks you out because your BMI isn’t “ideal” and then reports you to NHIS.

In Ghana, where communal living, empathy, and storytelling are part of our fabric, AI must adapt to us, not the other way around. It must be trained in our values: ubuntu, respect, and that small sense of humor that even our President uses to survive press conferences. We must code compassion, program protection, and encrypt empathy.

AI should be a helper, not a high-tech overlord. It should serve the species that created it, not sideline it for being “inefficient.” So yes, I firmly advocate that the protection of the human species and the dignity of the individual must be built into all AI systems, whether they’re analyzing data in Tamale or translating poetry in Teshie. Because the moment we forget the human behind the machine, we risk building machines that forget us entirely.

Let’s innovate with a conscience. Let’s build bots that know how to love, laugh, and maybe, just maybe, get the waakye order right.